AI Evaluation

Overview of the Evaluation Feature

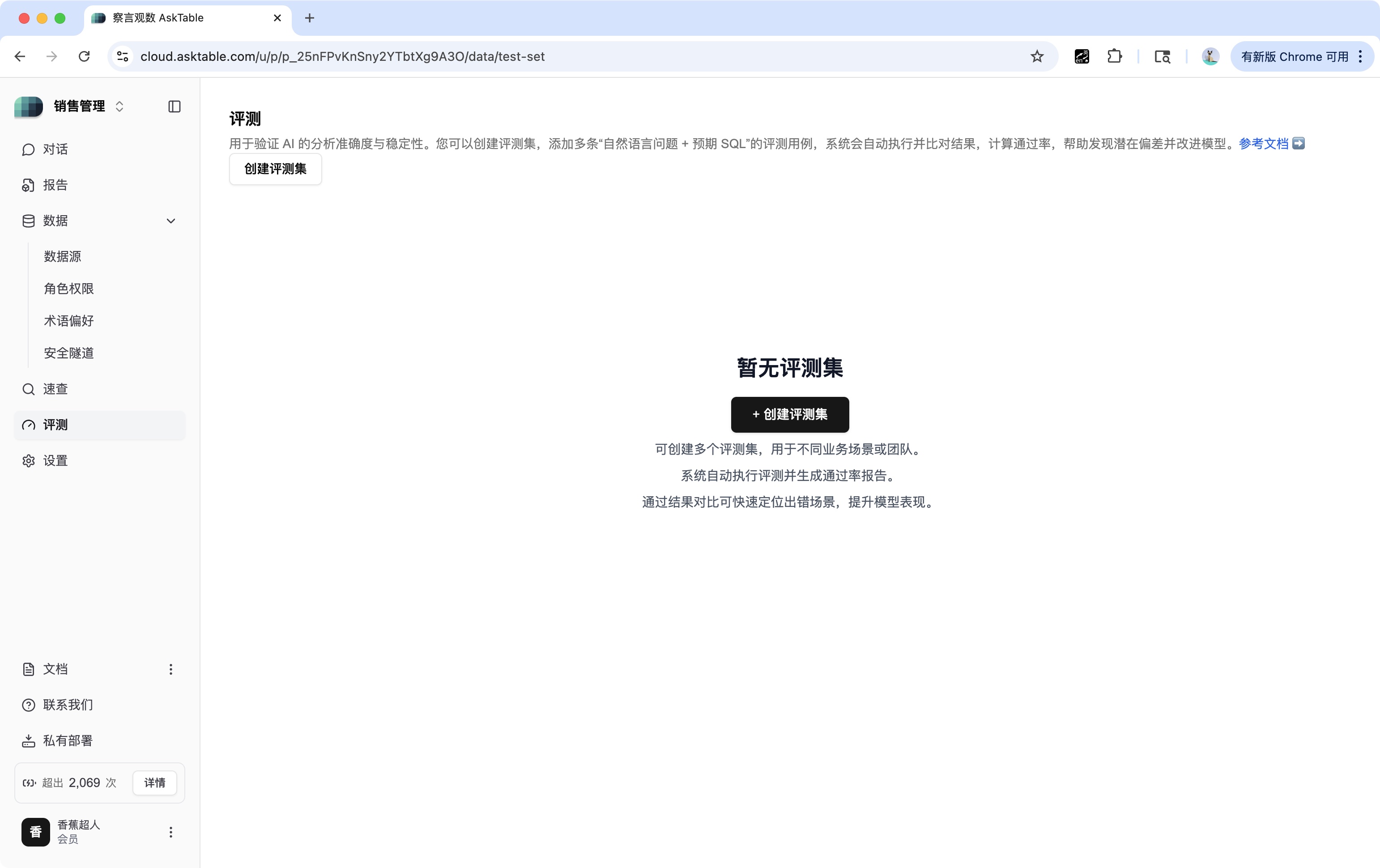

The "Evaluation" feature verifies the accuracy and stability of AskTable AI. You create an evaluation set and add test cases to it, each pairing a natural language question with the expected SQL. The system then runs the evaluation tasks automatically, compares the AI model's output against your standard answers, and calculates the pass rate, which helps you identify potential deviations and improve the model's performance.
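To make the pass-rate idea concrete, here is a minimal sketch in Python. It is not AskTable's implementation or API: the names `EvalCase`, `evaluate`, and `fake_model` are hypothetical, and the comparison rule (run both the expected SQL and the generated SQL, then compare the result rows) is an assumption, since this page does not say how outputs are compared. An in-memory SQLite database stands in for a real data source.

```python
import sqlite3
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class EvalCase:
    """One test case: a natural language question plus the expected SQL."""
    question: str
    expected_sql: str


def run_query(conn: sqlite3.Connection, sql: str) -> list:
    """Run a query and return its rows in a stable order, so row order is ignored."""
    return sorted(conn.execute(sql).fetchall(), key=repr)


def evaluate(conn: sqlite3.Connection,
             cases: List[EvalCase],
             generate_sql: Callable[[str], str]) -> float:
    """Return the fraction of cases whose generated SQL yields the expected rows.

    Assumption: a case passes when both queries return the same rows.
    """
    if not cases:
        return 0.0
    passed = 0
    for case in cases:
        expected = run_query(conn, case.expected_sql)
        try:
            actual = run_query(conn, generate_sql(case.question))
        except sqlite3.Error:
            actual = None  # SQL that fails to run counts as a failed case
        if actual == expected:
            passed += 1
    return passed / len(cases)


# Demo data: a tiny in-memory table standing in for a business database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?)",
                 [(1, 10.0), (2, 25.0), (3, 40.0)])

cases = [
    EvalCase("How many orders are there?", "SELECT COUNT(*) FROM orders"),
    EvalCase("What is the total order amount?", "SELECT SUM(amount) FROM orders"),
]


def fake_model(question: str) -> str:
    """Hypothetical stand-in for the AI model: one correct answer, one wrong."""
    answers = {
        "How many orders are there?": "SELECT COUNT(id) FROM orders",
        "What is the total order amount?": "SELECT MAX(amount) FROM orders",
    }
    return answers[question]


pass_rate = evaluate(conn, cases, fake_model)
print(f"pass rate: {pass_rate:.0%}")  # prints "pass rate: 50%"
```

In this sketch the first case passes even though the generated SQL differs textually from the expected SQL, because both return the same rows. The second case fails, which is the kind of scenario an evaluation report would surface for review.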

Core Value

- System Validation: Verify the AI's accuracy on complex or business-critical questions.

- Efficiency Improvement: The system automatically performs evaluations and generates reports, eliminating the need for manual comparison.

- Improvement Guidance: Compare results to quickly locate failing scenarios and guide improvements to model performance.

- Multi-Scenario Support: You can create multiple evaluation sets for different business scenarios or teams.